It’s hard to remember, now, but there was a time when Facebook was the most exciting thing on the Internet.

I was a junior at Harvard when thefacebook.com [] started in February 2004, but I didn’t sign up when I first heard about it. Since I had the relatively uncommon habit of reading The Crimson over breakfast, I knew about the trouble Mark had gotten into for Facemash, the “Hot or Not” site he’d built by scraping student ID photos from the websites of the undergraduate Houses. When I heard he had opened a Friendster-like site for Harvard students, I decided to wait and see.

Others were more adventurous. One of my physics classmates was sitting at his email (Pine, of course) when Mark sent the announcement to the list for AEPi, the Jewish fraternity []. He signed up, earning himself a user ID number under 10. I held out for three or four days until my coolest friend sent me an invitation to join []. By that point, more than 1,100 people had already joined. By the end of February, essentially everyone at Harvard was on.

Once signed in, it was easy to see what the excitement was about. While the site was very simple, there was an immediate rush to see what your friends were writing and to show off your most interesting self. Which books should I list to show off my literary sensibilities? Which forgotten philosopher should I quote? Should I include obscure bands, or embarrassing pop, or both? []

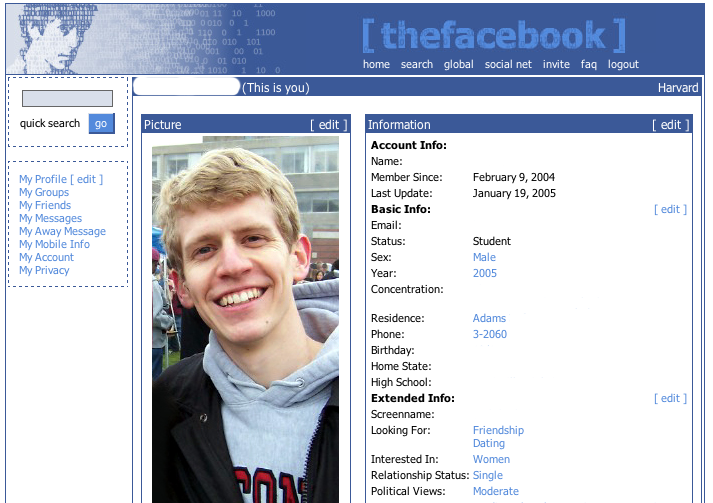

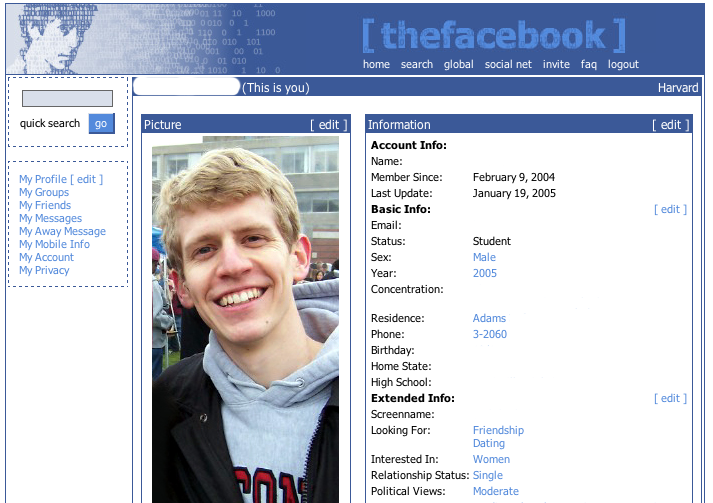

In the beginning there was only a static profile page with your picture and a number of text fields for your House, hometown, interests, favorite books and music, etc. No photo albums, no News Feed, no status updates; I don’t even remember if there was private messaging right away. There was a Wall, but it was just a text field that any of your friends could edit, and only the last person to make a change was noted underneath. And there was Poking, a vaguely naughty digital ping whose as-yet-undetermined social norms made it all the more interesting.

My Facebook profile, early in 2005, with its carefully curated interests.

You could browse the list of your friends’ friends, and every profile listed how many friends that person had. That quantification was a source of some angst in the early going, when it really was connected to the size of your social circle on that ambitious campus.

From today’s perspective, thefacebook was surprisingly private. You could only see someone’s profile if you were friends, and you could only get an account if you had a harvard.edu email address. That restriction was a big part of Facebook’s initial success: people were willing to create profiles because only their peers would see them, in contrast to Friendster or MySpace. And Harvard students love to be told they’re special and to be given exclusive access.

In those early days, you added new friends as more people joined, obsessively monitored your friends’ profiles for changes [], and continually tweaked your own. There was a page that showed which of your friends had recently updated their profiles, but you still had to figure out what was different []. There was an arms race to be the most clever within the constraints of the medium.

About the time we started getting the hang of thefacebook, Mark began expanding to other colleges: first Yale, then Stanford, and so on down the line of the U.S. News and World Report rankings. At first you couldn’t even friend people in other college networks, but that soon changed []. Still, for all intents and purposes the networks were separate.

Groups were imagined as ways for athletic teams and clubs to connect, but they quickly became another way to display your creativity and personality by showing solidarity with an idea. One of the most popular early groups at Harvard was “I Went to a Public School… Bitch”, signaling to all those elite toffs that you were from the mean streets of Naperville [].

As more schools were added, there was a fertile cross-pollination of the new digital culture across campuses. Student newspapers across the country grappled with the meaning of the poke. Chapters of popular Groups sprouted up on each campus, and jokes and references proliferated in patterns of viral remixing that now seem commonplace [].

That summer, Mark went off to California. He never came back, except to recruit. Facebook was never really ours again.

Perhaps surprisingly, very few of my classmates went to work for Facebook. In those days investment banking was viewed as the sure path to riches.

I’ve been calling him Mark here, quite familiarly, but I never knew Mark personally. I did take a gut (an easy course) with the brothers Winklevoss. I knew back then about the founding drama and ConnectU from The Crimson, and I’m certain that the brothers couldn’t have made Facebook Facebook. Part of Facebook’s appeal in those first days was that it was a clean, protected space, very different from the all-too-public hurly-burly of MySpace []. Mark’s technical instincts were vital to Facebook’s early success [].

The rest of the story you know. Facebook continued to grow, bringing in first high-schoolers, then companies, then everyone. The boundaries between networks slowly dissolved, and the default sharing settings slowly widened. The introduction of Photos and friend-tagging provided some reinforcement for offline interactions, but the general trend was toward more generic, global sharing. People began to worry about their privacy settings as their words and actions became exposed to outside eyes: family members, old acquaintances, employers.

And we grew older. The guy who was your buddy in class or in the dorms moved to a different city, and you lost touch with him, except in the weird limbo of Facebook, where you remain capital-F Friends and your seven-year-old inside jokes remain preserved in digital amber. You don’t notice it, as the News Feed pushes your recent history out of sight, but who you were trying to be back then can still be found in your Timeline. What was once a means of creative expression and a connection to a living community has ossified: a hidden record of who you aspired to be, as you became who you are now instead.